MiniMind Max2: Efficient Edge-Deployed Language Models

Overview

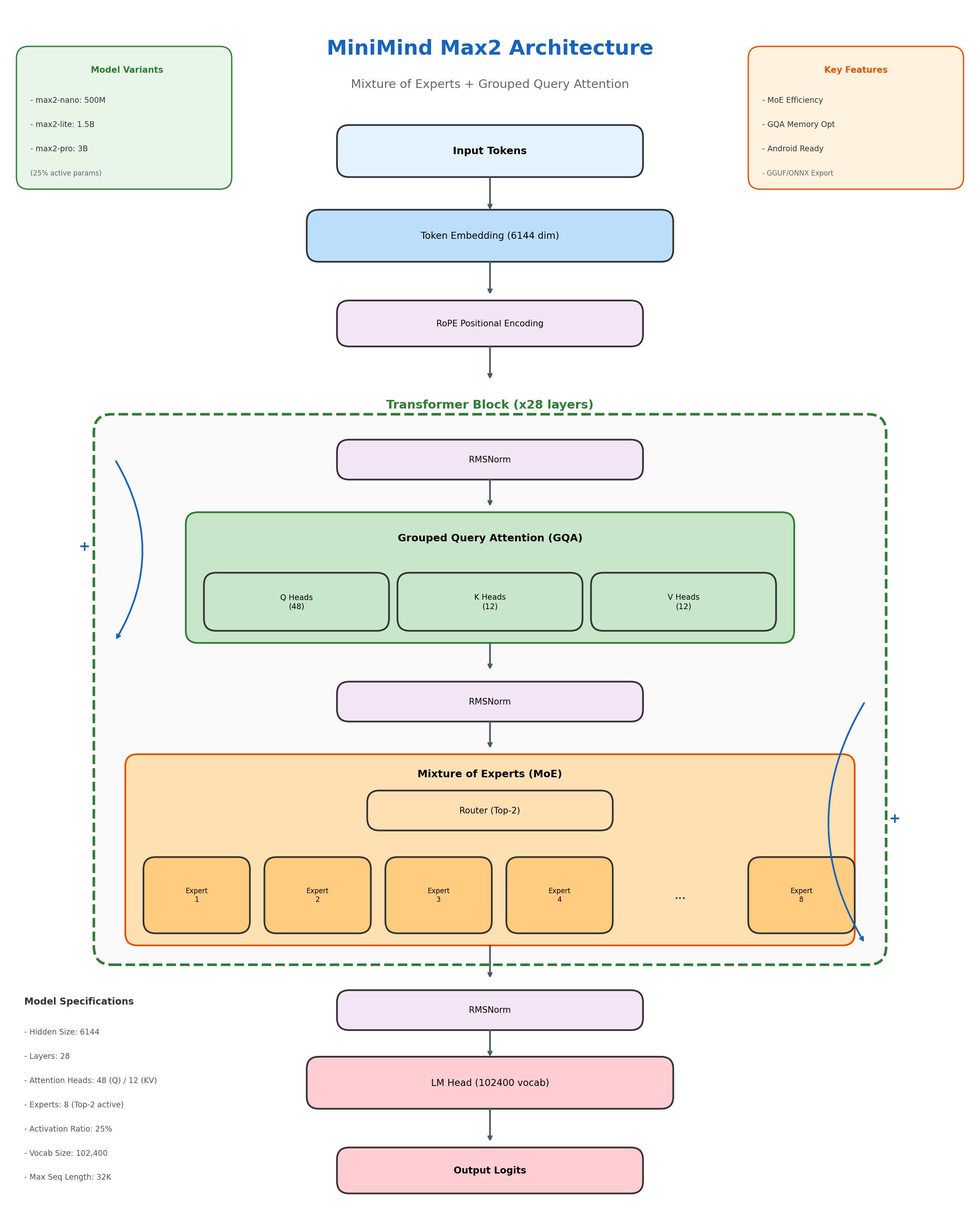

MiniMind Max2 is a family of efficient language models designed for edge deployment, inspired by MiniMax-01's architecture. The models combine Mixture of Experts (MoE) with Grouped Query Attention (GQA). Only 2 of the 8 experts (25% of the expert parameters) run for each token, and the key/value cache is kept small. Together, these keep inference cost low on constrained hardware.

Key Features

| Feature | Description |
|---|---|
| MoE Architecture | 8 experts with top-2 routing (25% of expert parameters active per token) |
| GQA Optimization | 4:1 ratio of query heads to key/value heads, reducing KV-cache memory |
| Edge Ready | Android NDK support with JNI bindings |
| Multiple Formats | SafeTensors, GGUF, and ONNX export |
| FP8 Support | Optimized for FP8 quantization |
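The 4:1 grouping means every four query heads read the same key/value head. As a result, only a quarter as many heads have to be cached as in full multi-head attention. The pure-Python sketch below illustrates that head mapping for a single decoding step. The head counts match the max2-nano configuration (16 query heads, 4 KV heads). The toy head dimension, the tensor values, and the function names `kv_head_for` and `gqa_decode_step` are illustrative assumptions and do not come from the model's code.

```python
import math


def kv_head_for(q_head: int, num_q_heads: int, num_kv_heads: int) -> int:
    """Map a query head to the KV head it shares under GQA (illustrative helper)."""
    group_size = num_q_heads // num_kv_heads  # 16 // 4 = 4 query heads per KV head
    return q_head // group_size


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def gqa_decode_step(queries, keys, values, num_kv_heads):
    """Scaled dot-product attention for one new token.

    queries: [num_q_heads][head_dim]
    keys, values: [num_kv_heads][seq_len][head_dim]  (the KV cache)
    returns: [num_q_heads][head_dim]
    """
    num_q_heads = len(queries)
    assert num_q_heads % num_kv_heads == 0
    outputs = []
    for h, q in enumerate(queries):
        kv = kv_head_for(h, num_q_heads, num_kv_heads)  # shared KV head
        scale = 1.0 / math.sqrt(len(q))
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys[kv]]
        weights = softmax(scores)
        head_dim = len(values[kv][0])
        out = [sum(w * v[d] for w, v in zip(weights, values[kv])) for d in range(head_dim)]
        outputs.append(out)
    return outputs


# Toy sizes: 16 query heads, 4 KV heads (4:1), head_dim 2, 3 cached tokens.
num_q, num_kv, seq = 16, 4, 3
queries = [[0.1 * (h + 1), -0.05 * h] for h in range(num_q)]
keys = [[[0.2 * (kv + t), 0.1 * t] for t in range(seq)] for kv in range(num_kv)]
values = [[[float(kv), float(t)] for t in range(seq)] for kv in range(num_kv)]

out = gqa_decode_step(queries, keys, values, num_kv)
print(len(out), len(out[0]))  # -> 16 2
print([kv_head_for(h, num_q, num_kv) for h in range(num_q)])
# -> [0, 0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 3, 3]
```

Only the `num_kv_heads` key/value tensors have to be cached. The memory saving of GQA comes from this, while every query head still produces its own output.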

Model Variants

| Model | Total Params | Active Params | Layers | Hidden Size | Experts | Use Case |
|---|---|---|---|---|---|---|
| max2-nano | 500M | 125M | 12 | 1024 | 8 | Mobile/IoT |
| max2-lite | 1.5B | 375M | 20 | 2048 | 8 | Edge devices |
| max2-pro | 3B | 750M | 28 | 3072 | 8 | High-performance edge |
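With top-2 routing over 8 experts, 2/8 = 25% of the expert feed-forward weights run for each token. Embeddings, attention, router, and norms run for every token regardless of routing, so they also count toward the active total. The sketch below counts both groups for a max2-nano-shaped model, using the values listed under Technical Specifications. It rests on several assumptions the card does not state:

- SwiGLU experts with three bias-free projections
- tied input/output embeddings
- `head_dim = hidden_size / num_attention_heads`
- the separate `intermediate_size: 2816` entry is ignored, because its role is not explained

`Max2Shape` and `count_params` are hypothetical names. Treat the totals as rough estimates, not as the official figures in the table above.

```python
from dataclasses import dataclass


@dataclass
class Max2Shape:
    # Values from the max2-nano configuration; the weight layout below is assumed.
    hidden_size: int = 1024
    num_layers: int = 12
    num_attention_heads: int = 16
    num_key_value_heads: int = 4
    num_experts: int = 8
    num_experts_per_token: int = 2
    expert_intermediate_size: int = 1408
    vocab_size: int = 102400


def count_params(cfg: Max2Shape) -> dict:
    head_dim = cfg.hidden_size // cfg.num_attention_heads
    kv_dim = cfg.num_key_value_heads * head_dim
    # Attention: Q and O are hidden x hidden; K and V are hidden x kv_dim under GQA.
    attn = 2 * cfg.hidden_size * cfg.hidden_size + 2 * cfg.hidden_size * kv_dim
    router = cfg.hidden_size * cfg.num_experts
    norms = 2 * cfg.hidden_size  # two RMSNorm weight vectors per block
    # SwiGLU expert: gate and up (hidden -> inter), down (inter -> hidden).
    expert = 3 * cfg.hidden_size * cfg.expert_intermediate_size
    embed = cfg.vocab_size * cfg.hidden_size  # assumed tied with the LM head
    # Always-active weights: embeddings, final norm, and per-layer attention/router/norms.
    shared = embed + cfg.hidden_size + cfg.num_layers * (attn + router + norms)
    experts_total = cfg.num_layers * cfg.num_experts * expert
    experts_active = cfg.num_layers * cfg.num_experts_per_token * expert
    return {
        "expert_active_ratio": experts_active / experts_total,
        "total": shared + experts_total,
        "active": shared + experts_active,
    }


counts = count_params(Max2Shape())
print(f"expert weights active per token: {counts['expert_active_ratio']:.0%}")  # -> 25%
print(f"estimated total: {counts['total'] / 1e6:.0f}M, active: {counts['active'] / 1e6:.0f}M")
```

The 25% ratio holds exactly for the expert weights. The overall active fraction depends on how large the always-on shared weights are relative to the experts.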

Architecture Details

```text
┌───────────────────────────────────────────────────────────────┐
│                  MiniMind Max2 Architecture                   │
├───────────────────────────────────────────────────────────────┤
│                                                               │
│  Input Tokens                                                 │
│        │                                                      │
│        ▼                                                      │
│  ┌─────────────────────────────────────────┐                  │
│  │  Token Embedding                        │                  │
│  └─────────────────────────────────────────┘                  │
│        │                                                      │
│        ▼                                                      │
│  ╔═════════════════════════════════════════════════════════╗  │
│  ║              Transformer Block (×N layers)              ║  │
│  ║  ┌───────────────────────────────────────────────────┐  ║  │
│  ║  │  RMSNorm                                          │  ║  │
│  ║  └───────────────────────────────────────────────────┘  ║  │
│  ║     │                                                   ║  │
│  ║     ▼                                                   ║  │
│  ║  ┌───────────────────────────────────────────────────┐  ║  │
│  ║  │  Grouped Query Attention (GQA)                    │  ║  │
│  ║  │  RoPE applied to Q and K                          │  ║  │
│  ║  │  ┌────────┐ ┌────────┐ ┌────────┐                 │  ║  │
│  ║  │  │Q Heads │ │K Heads │ │V Heads │                 │  ║  │
│  ║  │  │  (48)  │ │  (12)  │ │  (12)  │                 │  ║  │
│  ║  │  └────────┘ └────────┘ └────────┘                 │  ║  │
│  ║  └───────────────────────────────────────────────────┘  ║  │
│  ║     │                                                   ║  │
│  ║     ▼ (+Residual)                                       ║  │
│  ║  ┌───────────────────────────────────────────────────┐  ║  │
│  ║  │  RMSNorm                                          │  ║  │
│  ║  └───────────────────────────────────────────────────┘  ║  │
│  ║     │                                                   ║  │
│  ║     ▼                                                   ║  │
│  ║  ┌───────────────────────────────────────────────────┐  ║  │
│  ║  │  Mixture of Experts (MoE)                         │  ║  │
│  ║  │  ┌─────────────────────────────────────────────┐  │  ║  │
│  ║  │  │  Router (Top-2)                             │  │  ║  │
│  ║  │  └─────────────────────────────────────────────┘  │  ║  │
│  ║  │     │                                             │  ║  │
│  ║  │     ▼                                             │  ║  │
│  ║  │  ┌──────┐┌──────┐┌──────┐┌──────┐    ┌──────┐     │  ║  │
│  ║  │  │Exp 1 ││Exp 2 ││Exp 3 ││Exp 4 │....│Exp 8 │     │  ║  │
│  ║  │  │SwiGLU││SwiGLU││SwiGLU││SwiGLU│    │SwiGLU│     │  ║  │
│  ║  │  └──────┘└──────┘└──────┘└──────┘    └──────┘     │  ║  │
│  ║  └───────────────────────────────────────────────────┘  ║  │
│  ║     │                                                   ║  │
│  ║     ▼ (+Residual)                                       ║  │
│  ╚═════════════════════════════════════════════════════════╝  │
│        │                                                      │
│        ▼                                                      │
│  ┌─────────────────────────────────────────┐                  │
│  │  Final RMSNorm + LM Head                │                  │
│  └─────────────────────────────────────────┘                  │
│        │                                                      │
│        ▼                                                      │
│  Output Logits (vocab_size: 102,400)                          │
│                                                               │
└───────────────────────────────────────────────────────────────┘
```
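The MoE block in the diagram can be sketched as follows. A linear router scores all 8 experts, only the two highest-scoring SwiGLU experts run on the token, and their outputs are mixed using a softmax taken over the two selected router logits (Mixtral-style top-k routing). This pure-Python illustration rests on assumptions: toy dimensions, random weights, and that routing scheme. The card does not specify MiniMind's actual router normalization, any load-balancing loss, or capacity limits. `SwiGLUExpert` and `moe_forward` are hypothetical names.

```python
import math
import random


def silu(x: float) -> float:
    return x / (1.0 + math.exp(-x))


def matvec(w, x):
    # w: [out][in], x: [in] -> [out]
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class SwiGLUExpert:
    """down(silu(gate(x)) * up(x)) with bias-free projections."""

    def __init__(self, hidden, inter, rng):
        self.gate = rand_matrix(inter, hidden, rng)
        self.up = rand_matrix(inter, hidden, rng)
        self.down = rand_matrix(hidden, inter, rng)

    def __call__(self, x):
        g = matvec(self.gate, x)
        u = matvec(self.up, x)
        return matvec(self.down, [silu(a) * b for a, b in zip(g, u)])


def moe_forward(x, router_w, experts, top_k=2):
    logits = matvec(router_w, x)  # one score per expert
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Softmax over the selected logits only, so the top_k weights sum to 1.
    m = max(logits[i] for i in chosen)
    exps = {i: math.exp(logits[i] - m) for i in chosen}
    total = sum(exps.values())
    out = [0.0] * len(x)
    for i in chosen:  # only the top_k experts execute
        y = experts[i](x)
        out = [o + (exps[i] / total) * yi for o, yi in zip(out, y)]
    return out, chosen


rng = random.Random(0)
hidden, inter, num_experts = 8, 16, 8
experts = [SwiGLUExpert(hidden, inter, rng) for _ in range(num_experts)]
router_w = rand_matrix(num_experts, hidden, rng)
x = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]

y, chosen = moe_forward(x, router_w, experts)
print("experts used:", chosen, "output dim:", len(y))
```

The six unselected experts are never evaluated for this token. This skipped compute is where the sparse MoE saves work relative to a dense feed-forward layer of the same total size.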

Quick Start

Installation

```shell
pip install torch transformers safetensors
```

Basic Usage

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load model
model = AutoModelForCausalLM.from_pretrained(
    "fariasultana/MiniMind",
    trust_remote_code=True
)
tokenizer = AutoTokenizer.from_pretrained("fariasultana/MiniMind")

# Generate text
inputs = tokenizer("The future of AI is", return_tensors="pt")
outputs = model.generate(**inputs, max_new_tokens=50)
print(tokenizer.decode(outputs[0]))
```

Using the API

```python
from huggingface_hub import InferenceClient

client = InferenceClient("fariasultana/MiniMind-API")
response = client.text_generation("Explain quantum computing in simple terms")
print(response)
```

Technical Specifications

Model Configuration (max2-nano)

```yaml
Architecture:
  hidden_size: 1024
  num_layers: 12
  num_attention_heads: 16
  num_key_value_heads: 4          # GQA ratio 4:1
  intermediate_size: 2816

MoE Configuration:
  num_experts: 8
  num_experts_per_token: 2        # Top-2 routing
  expert_intermediate_size: 1408

Efficiency:
  total_parameters: 500M
  active_parameters: 125M         # 25% activation
  activation_ratio: 0.25

Training:
  max_sequence_length: 32768
  vocab_size: 102400
  rope_theta: 10000.0
```
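GQA pays off most clearly in KV-cache size. At each layer, the cache stores one key vector and one value vector per KV head for every token. That gives bytes = 2 × num_layers × num_kv_heads × head_dim × seq_len × bytes_per_element. The sketch applies this formula to the max2-nano values above. It assumes `head_dim = hidden_size / num_attention_heads = 64` and a 16-bit cache (2 bytes per element); an FP8 cache (1 byte per element) would halve every figure. `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_elem=2, batch=1):
    # Factor 2: one key tensor and one value tensor per layer.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_elem * batch


MIB = 1024 ** 2
head_dim = 1024 // 16  # hidden_size / num_attention_heads (assumed layout)
seq_len = 32768        # max_sequence_length

gqa = kv_cache_bytes(num_layers=12, num_kv_heads=4, head_dim=head_dim, seq_len=seq_len)
mha = kv_cache_bytes(num_layers=12, num_kv_heads=16, head_dim=head_dim, seq_len=seq_len)

print(f"GQA (4 KV heads):  {gqa / MIB:.0f} MiB")  # -> 384 MiB
print(f"MHA (16 KV heads): {mha / MIB:.0f} MiB")  # -> 1536 MiB
print(f"reduction: {mha // gqa}x")                 # -> 4x
```

At the full 32K context, the 4:1 grouping is what separates a cache that fits in a phone's memory budget from one that likely does not.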

Evaluation Results

All scores are self-reported accuracies.

| Benchmark | max2-nano | max2-lite | max2-pro |
|---|---|---|---|
| HellaSwag | 41.2% | 52.8% | 61.4% |
| ARC-Challenge | 29.8% | 38.5% | 45.2% |
| MMLU | 26.7% | 35.2% | 42.8% |
| TruthfulQA | 38.5% | 44.2% | 48.6% |
| Winogrande | 52.8% | 58.4% | 63.1% |

Export Formats

GGUF (llama.cpp)

```shell
python -m scripts.export --model max2-nano --format gguf --output model.gguf
```

ONNX

```shell
python -m scripts.export --model max2-nano --format onnx --output model.onnx
```

Android Deployment

```shell
python -m scripts.export --model max2-nano --format android --output ./android_export
```
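After a GGUF export, a quick sanity check is to read the file's fixed header. It consists of the 4-byte magic `GGUF`, a little-endian uint32 version, and two uint64 counts: tensors, then metadata key/value pairs. This is the layout used by GGUF versions 2 and 3. The sketch below reads only that header using the standard library. `read_gguf_header` is a hypothetical helper, and the demo runs on a synthetic header with made-up counts. For full inspection of tensors and metadata, the `gguf` Python package from the llama.cpp repository is the more complete tool.

```python
import io
import struct


def read_gguf_header(f):
    """Read the fixed-size GGUF header (v2/v3, little-endian) from a binary file object."""
    magic = f.read(4)
    if magic != b"GGUF":
        raise ValueError(f"not a GGUF file (magic={magic!r})")
    (version,) = struct.unpack("<I", f.read(4))
    tensor_count, kv_count = struct.unpack("<QQ", f.read(16))
    return {"version": version, "tensor_count": tensor_count, "metadata_kv_count": kv_count}


# Self-contained demo on a synthetic header; for a real export, use:
#   with open("model.gguf", "rb") as f:
#       print(read_gguf_header(f))
fake = io.BytesIO(b"GGUF" + struct.pack("<IQQ", 3, 291, 24))
print(read_gguf_header(fake))
# -> {'version': 3, 'tensor_count': 291, 'metadata_kv_count': 24}
```

A wrong magic value or an implausible tensor count usually means the export was interrupted or wrote a different format.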

Citation

```bibtex
@misc{minimind-max2-2024,
  title={MiniMind Max2: Efficient Language Models for Edge Deployment},
  author={Matrix Agent},
  year={2024},
  howpublished={\url{https://huggingface.co/fariasultana/MiniMind}}
}
```

Related Papers

- MiniMax-01: Scaling Foundation Models with Lightning Attention

- Efficient Sparse Attention Mechanisms

- Optimizing MoE for Edge Deployment

License

Apache 2.0 - See LICENSE for details.

Built with efficiency in mind for the edge AI revolution
